There is a My Oracle Support note, “Master Note for OLTP Compression [ID 1223705.1]”, which talks about the overheads of “COMPRESS FOR ALL OPERATIONS”. It’s a great note and, for a very artificial test, quotes overheads of around 25% CPU, 100% redo and 100% undo when inserting data via conventional methods. The purpose of this post is to visualise when the extra resources are used.

My test uses a SQL*Plus session that repeatedly inserts a single row into a table with “COMPRESS FOR ALL OPERATIONS” enabled. After each insert I use the SQL*Plus HOST command to capture redo and undo statistics for my session. I struggled to measure CPU for each insert. The “CPU used by this session” statistic was not granular enough for my single row inserts and the CPU tracked by SQL Trace was being rounded to 0ms or 1ms for each insert. However the execute elapsed attribute from SQL Trace did give me good granularity. Obviously SQL Trace has it’s own overheads as I’ve talked about before but I don’t think they are important in this case as I’m just after a visualisation of when extra resources are used.

The shell and SQL scripts used to capture statistics are at the foot of this post.

My SQL*Plus control session looks a little like this:

sqlplus neil

create table compteststats

( testid varchar2(10)

, snapid number

, statname varchar2(64)

, statval number);

create table compobj

( col1 varchar2(30)

, col2 number

, col3 varchar2(30)

, col4 varchar2(30)

, col5 number

, col6 varchar2(30))

compress for all operations;

exec dbms_application_info.set_module('TESTSESS','TESTSESS');

exec dbms_monitor.session_trace_enable

--capture starting values

host ./inscompstat.sh TEST1

insert into compobj values (lpad('w',30,'w'),1,lpad('x',30,'x'),lpad('y',30,'y'),2,lpad('z',30,'z'));

host ./inscompstat.sh TEST1

insert into compobj values (lpad('w',30,'w'),1,lpad('x',30,'x'),lpad('y',30,'y'),2,lpad('z',30,'z'));

host ./inscompstat.sh TEST1

-- repeat above insert/host combination until the first block is full and we move to a second

exit

I was then able to report redo and undo statistics using the SQL below

select snapid row# , max(decode(statname,'redo size',delta,0)) redo_size , max(decode(statname,'undo change vector size',delta,0)) undo_size , max(decode(statname,'HSC OLTP positive compression',delta,0)) HSC_OLTP_positive_comp , max(decode(statname,'HSC OLTP Compressed Blocks',statval,0)) HSC_OLTP_Compressed_Blocks from ( select testid,snapid,statname, statval ,statval-lag(statval) over (partition by testid,statname order by snapid) delta from compteststats where testid = 'TEST2' and snapid > 0 ) group by snapid order by snapid;

And I captured the elapsed time for each insert from the SQL Trace file using the grep/awk combo below. I then combined these values with the redo/undo figures in a spreadsheet.

grep "^EXEC #139771649698992" orcl1_ora_6987.trc | awk -F[,=] '{print $4}'

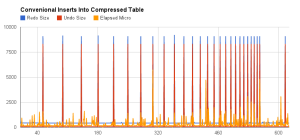

The chart produced from the spreadsheet looks like this, you can click to enlarge but get the gist from the small version.

The number of inserts is plotted on the X axis and the Y axis is either the value from v$sesstat or elapsed micro seconds from the trace file. The values are not important. What I found interesting was being able to visualise the life of the block. You can see the spikes in redo and undo each time the block is (re)compressed and as the block gradually fills up the compressions become more frequent until we get a lull in the compressions as we start to fill a second data block. The last spike on the chart is first compression of the second block.

Excerpts from my data is below. You can see the “HSC OLTP positive compression” has a delta of 1 for each spike demonstrating that the spike is caused by block compression.

ROW# REDO_SIZE UNDO_SIZE HSC_OLTP_POSITIVE_COMP HSC_OLTP_COMPRESSED_BLOCKS

---------- ---------- ---------- ---------------------- --------------------------

1 604 112 0 0

2 432 68 0 0

3 432 68 0 0

4 432 68 0 0

5 432 68 0 0

6 432 68 0 0

7 432 68 0 0

8 432 68 0 0

...

51 432 68 0 0

52 432 68 0 0

53 9092 8320 1 1

54 420 68 0 1

55 420 68 0 1

...

97 420 68 0 1

98 420 68 0 1

99 9152 8320 1 1

100 420 68 0 1

101 420 68 0 1

...

613 432 68 0 1

614 432 68 0 1

615 9092 8320 1 2

616 420 68 0 2

617 420 68 0 2

As stated previously – here are my scripts.

inscompstat.sh

sqlplus -S neil/neil <<SQLINP @inscompstat $1 exit SQLINP

inscompstat.sql

set verify off

insert into compteststats

(testid,snapid,statname,statval)

select '&1', c.maxsnap, s.name, s.value

from (

select n.name, s.value

from v$session u, v$sesstat s, v$statname n

where u.sid = s.sid

and s.statistic# = n.statistic#

and u.module = 'TESTSESS'

and n.name in ( 'redo size'

, 'undo change vector size'

, 'HSC OLTP positive compression'

, 'HSC OLTP Compressed Blocks')

) s

, (select nvl(max(snapid),-1)+1 maxsnap

from compteststats

where testid = '&1') c

;